result_automation_system

Automated College Result Scraper

The “RGPV Result Scraper” is a Python script designed to automate the process of extracting student result data from the Rajiv Gandhi Proudyogiki Vishwavidyalaya (RGPV) website. This script enables users to retrieve results for specific branches of study and semesters, subsequently saving the scraped data into CSV files for further analysis or record-keeping.

Key Features:

- Web Scraping: The script uses web scraping techniques to access and extract information from the RGPV result portal.

- Captcha Handling: It automates captcha entry using Optical Character Recognition (OCR) with Tesseract, so the result form can be submitted without typing each captcha by hand.

- Customization: Users can select the desired branch of study and semester before starting the scraping process.

- Data Export: The extracted result data, including student roll numbers, names, SGPA, CGPA, and results, is organized and saved in CSV files.
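A minimal sketch of the CSV export step, using Python's standard csv module. The column names and the append_result_row helper are assumptions for illustration; project.py may name its columns differently or write the file with pandas.

```python
import csv
import os

# Hypothetical column names, based on the fields listed above.
FIELDNAMES = ["roll_number", "name", "sgpa", "cgpa", "result"]


def append_result_row(csv_path: str, row: dict) -> None:
    """Append one student's result to csv_path, writing a header if the file is new or empty."""
    write_header = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    # newline="" is what the csv module docs recommend, to avoid blank lines on Windows.
    with open(csv_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        if write_header:
            writer.writeheader()
        writer.writerow(row)
```

Appending one row per student as it is scraped means that results collected so far survive if a long run is interrupted.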

Overview

This Python script automates scraping student result data from the Rajiv Gandhi Proudyogiki Vishwavidyalaya (RGPV) website. It retrieves the results for a chosen branch and semester and stores them in CSV files.

YouTube Video Tutorial

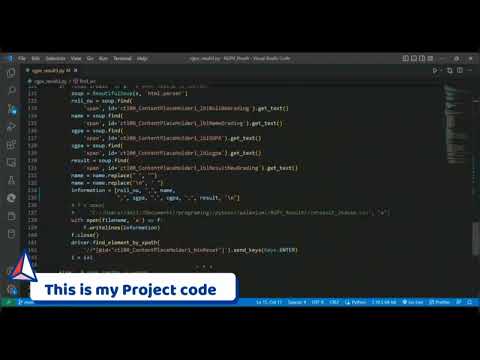

You can watch the video tutorial on how to use this project by clicking on the following image:

Prerequisites

Before using this script, make sure you have the following dependencies installed:

- Python 3.x

- Microsoft Edge WebDriver (msedgedriver.exe)

- Tesseract OCR engine (see Installing Tesseract OCR below)

- Required Python libraries (can be installed via pip):

  - selenium
  - requests
  - Pillow (PIL)
  - BeautifulSoup4 (bs4)
  - pytesseract
  - pandas (for data manipulation, optional)
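Before running the scraper, a short check such as the following can confirm that the libraries listed above are importable. This is a convenience sketch, not part of the original project; the module lists are assumptions based on the list above. Tesseract itself is a separate program and is not covered by this check.

```python
import importlib.util

# Import names for the libraries listed above. Some differ from the pip
# package names: Pillow is imported as PIL and BeautifulSoup4 as bs4.
REQUIRED_MODULES = ["selenium", "requests", "PIL", "bs4", "pytesseract"]
OPTIONAL_MODULES = ["pandas"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing required libraries:", ", ".join(missing))
    else:
        print("All required libraries are installed.")
    for name in missing_modules(OPTIONAL_MODULES):
        print("Optional library not installed:", name)
```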

Getting Started

- Clone the repository to your local machine:

  git clone https://github.com/devnamdev2003/result_automation_system.git

- Navigate to the project directory:

  cd result_automation_system

- Install the required Python libraries:

  pip install -r requirements.txt

Usage

- Edit the main() function in the project.py script to customize the scraping parameters, such as the branch, semester, and starting roll number.

- Ensure that the msedgedriver.exe WebDriver executable is placed in the project directory.

- Run the script:

  python project.py

- The script will start scraping student results and save them to CSV files in the project directory.
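For orientation, the browser setup that depends on msedgedriver.exe might look roughly like this under Selenium 4. It is a sketch, not the project's actual code: open_result_page is a hypothetical helper, and locating radlstProgram_1 by its element ID is an assumption. With Selenium 4.6 or later, Selenium Manager can usually find a matching driver on its own, in which case webdriver.Edge() can be called without a Service.

```python
import os

# Assumption: msedgedriver.exe sits in the current (project) directory.
edge_driver_path = os.path.join(os.getcwd(), "msedgedriver.exe")
RESULT_URL = "http://result.rgpv.ac.in/Result/ProgramSelect.aspx"


def open_result_page(driver_path=edge_driver_path):
    """Start Edge, open the RGPV program selection page, and pick the program."""
    # Imported here so this module can be loaded even where Selenium is absent.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.edge.service import Service

    driver = webdriver.Edge(service=Service(executable_path=driver_path))
    driver.implicitly_wait(0.5)  # seconds to wait when locating elements
    driver.get(RESULT_URL)
    # Assumption: radlstProgram_1 is the id of the program radio button.
    driver.find_element(By.ID, "radlstProgram_1").click()
    return driver
```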

Adapting the Code

To adapt this code for your college, follow these steps:

- Edit project.py:

  - Open the project.py script in a text editor or code editor of your choice.
  - Modify the code in the main() function to match your college’s website structure. Specifically, adjust the following:
    - The URL of your college’s result page.
    - The HTML elements and XPaths used for interacting with the web page.
    - The logic for handling captcha and extracting result data.

- Customize Parameters:

  - Customize parameters like the branch number, semester, starting roll number, and any other relevant information specific to your college.

- Test the Code:

  - Test the modified code with a few sample roll numbers to ensure it works correctly for your college.
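One way to keep these adjustments manageable is to collect every site-specific value in one place. This is a suggestion, not necessarily how project.py is organized: SiteConfig is hypothetical, and only the result URL and radlstProgram_1 come from this README; the other element IDs are placeholders to fill in from your own portal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteConfig:
    """Hypothetical container for the values that change between college portals."""

    result_url: str
    program_locator: str   # id of the program radio button
    enrollment_input: str  # id of the enrollment-number text box
    captcha_input: str     # id of the captcha text box
    submit_button: str     # id of the submit button


# Only result_url and program_locator come from this README; the rest are placeholders.
RGPV = SiteConfig(
    result_url="http://result.rgpv.ac.in/Result/ProgramSelect.aspx",
    program_locator="radlstProgram_1",
    enrollment_input="ENROLLMENT_INPUT_ID",
    captcha_input="CAPTCHA_INPUT_ID",
    submit_button="SUBMIT_BUTTON_ID",
)
```

Adapting the scraper to another college then mostly means creating a new SiteConfig with that portal's URL and element IDs, which can be read from the browser's developer tools.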

Configuration

You can customize the following parameters in the main() function of project.py:

- branch: Enter the branch number according to your college’s branch codes.

- sem: Set the semester for which you want to scrape results.

- i (starting roll number) and en_num (roll number format): These should match your college’s enrollment number format.
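The exact en_num format is college-specific and is not given here. As a sketch, assuming an enrollment number is a fixed prefix followed by a zero-padded roll counter i, the numbers could be generated like this (make_enrollment_number, the prefix, and the default width of 3 are all hypothetical):

```python
def make_enrollment_number(prefix: str, i: int, width: int = 3) -> str:
    """Join a fixed prefix with the roll counter i, zero-padded to at least `width` digits."""
    return f"{prefix}{i:0{width}d}"


def enrollment_numbers(prefix: str, start: int, stop: int, width: int = 3):
    """Yield enrollment numbers for roll counters start, start + 1, ..., stop - 1."""
    for i in range(start, stop):
        yield make_enrollment_number(prefix, i, width)
```

For example, make_enrollment_number("ABC", 7) returns "ABC007".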

Installing Tesseract OCR

Tesseract OCR is an open-source optical character recognition engine that is widely used for text recognition in images. To use Tesseract OCR in your project, follow these steps to install it on your system:

Step 1: Download and Install Tesseract OCR

- Windows:

  - Download the Tesseract OCR installer for Windows from the Tesseract GitHub releases page. Look for the latest version suitable for your system (e.g., tesseract-ocr-w64-setup-vX.X.X.exe).
  - Run the installer and follow the installation instructions. Make sure to select the option to add Tesseract to your system’s PATH during installation.

- Linux (Debian/Ubuntu):

  - Open a terminal and run the following commands to install Tesseract:

        sudo apt update
        sudo apt install tesseract-ocr
        sudo apt install libtesseract-dev

    The libtesseract-dev package provides development files for building software against the Tesseract library; pytesseract itself only needs the tesseract-ocr package.

- macOS (Homebrew):

  - If you don’t have Homebrew installed, install it by following the instructions at Homebrew.
  - Open a terminal and run the following command to install Tesseract:

        brew install tesseract

Step 2: Verify the Installation

To verify that Tesseract OCR is installed correctly, open a terminal or command prompt and run the following command:

tesseract --version

You should see the Tesseract OCR version information, indicating that the installation was successful.
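The same check can be run from Python, which is handy inside a setup script. This is a sketch; tesseract_version is a hypothetical helper that simply runs tesseract --version and returns the first line of its output.

```python
import subprocess


def tesseract_version(cmd: str = "tesseract"):
    """Return the first line of `<cmd> --version`, or None if it cannot be run."""
    try:
        proc = subprocess.run([cmd, "--version"], capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        # OSError covers FileNotFoundError when the executable is not installed.
        return None
    # Some older Tesseract releases print the version to stderr instead of stdout.
    output = (proc.stdout or proc.stderr).strip()
    return output.splitlines()[0] if output else None


if __name__ == "__main__":
    print(tesseract_version() or "Tesseract not found on PATH")
```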

Step 3: Using Tesseract OCR

You can now use Tesseract OCR in your Python scripts or applications. Make sure to install the pytesseract Python library, which provides a Python wrapper for Tesseract OCR. You can install it using pip:

pip install pytesseract

Refer to the pytesseract documentation for information on how to use Tesseract OCR with Python.
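As a rough illustration of the read_text helper described under Workflow, the sketch below runs OCR with pytesseract.image_to_string and then cleans the result. The cleaning rule (keep only ASCII letters and digits) and the --psm 7 option, which tells Tesseract to treat the image as a single line of text, are assumptions about what suits a captcha; the project's own cleanup may differ.

```python
import os
import re


def clean_captcha_text(raw: str) -> str:
    """Keep only ASCII letters and digits (assumed to be the captcha alphabet)."""
    return re.sub(r"[^A-Za-z0-9]", "", raw)


def read_text(captcha_image: str) -> str:
    """OCR a captcha image file, clean the text, and delete the image afterwards."""
    # Imported here so clean_captcha_text can be used without the OCR dependencies.
    import pytesseract
    from PIL import Image

    try:
        with Image.open(captcha_image) as img:
            raw = pytesseract.image_to_string(img, config="--psm 7")
    finally:
        # Delete the downloaded image, as the original helper does.
        if os.path.exists(captcha_image):
            os.remove(captcha_image)
    return clean_captcha_text(raw)
```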

Step 4: Update the path

- Set the path to the Tesseract executable, replacing the example path with your own Tesseract installation path.

- Example: if Tesseract is installed at “C:\Program Files\Tesseract-OCR\tesseract.exe”, set it as follows:

    import pytesseract

    def read_text(captcha_image):
        tesseract_path = r"C:\Program Files\Tesseract-OCR\tesseract.exe"
        # Tell pytesseract where the Tesseract executable is.
        pytesseract.pytesseract.tesseract_cmd = tesseract_path
        # Rest of your code...
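Hard-coding a path works on one machine but breaks when the project moves to another. A hedged alternative checks an override first, then the PATH, then the default Windows location. Here TESSERACT_CMD is a hypothetical environment variable name and resolve_tesseract_cmd is not part of the project; pytesseract.pytesseract.tesseract_cmd is the attribute pytesseract reads to locate the executable.

```python
import os
import shutil

# Default Windows install location used in the example above.
DEFAULT_WINDOWS_PATH = r"C:\Program Files\Tesseract-OCR\tesseract.exe"


def resolve_tesseract_cmd(env_var: str = "TESSERACT_CMD"):
    """Pick the Tesseract executable: env override, then PATH, then the Windows default."""
    override = os.environ.get(env_var)
    if override:
        return override
    on_path = shutil.which("tesseract")
    if on_path:
        return on_path
    if os.path.exists(DEFAULT_WINDOWS_PATH):
        return DEFAULT_WINDOWS_PATH
    return None


# Typical use before calling pytesseract:
#     import pytesseract
#     cmd = resolve_tesseract_cmd()
#     if cmd:
#         pytesseract.pytesseract.tesseract_cmd = cmd
```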

Workflow

- Import Libraries:

  - The script starts by importing the necessary Python libraries, including those for web scraping, web automation, image processing, and file handling.

- Helper Functions:

  - find_src(source_code): Parses the HTML source code of a web page to locate and extract the URL of the captcha image.
  - download_image(url, image_name): Downloads the captcha image from a given URL and saves it locally with the specified name.
  - read_text(captcha_image): Reads text from a captcha image using Tesseract OCR, cleans the extracted text, and deletes the image file.

- Main Function (main()):

  - User Input:
    - The user is prompted to choose a branch of study by entering a number (e.g., Computer Science, Mechanical Engineering, etc.).
    - The semester is not entered interactively; it is hardcoded as “6” in this script.
  - Browser Automation:
    - A WebDriver for Microsoft Edge (or the browser of your choice) is started for web automation using Selenium.
    - An implicit wait of 0.5 seconds is set for locating elements.
  - Website Navigation:
    - The script navigates to the RGPV result page (http://result.rgpv.ac.in/Result/ProgramSelect.aspx).
    - It selects the appropriate program (radlstProgram_1), which corresponds to the program for undergraduate results.
  - Loop Over Roll Numbers:
    - Inside a while loop, the script repeats the following steps for each roll number:
      - Enrollment Number Generation
      - Captcha Image Download
      - Captcha Text Extraction
      - Form Filling and Submission
  - Handling Scenarios:
    - The script handles three possible scenarios after form submission:
      - Correct Captcha
      - Enrollment Number Not Found
      - Wrong Captcha

- Main Execution:

  - Finally, the script checks whether it is being run as the main program (if __name__ == "__main__":) and calls the main() function to start the scraping process.
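The find_src and download_image helpers can be sketched with the standard library alone. This swaps in html.parser and urllib for the BeautifulSoup and requests calls the project uses, adds a base_url parameter (not in the original signature) so relative src values become absolute, and assumes the captcha is the first img element whose src contains "captcha"; check the real page's markup before relying on that.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class _CaptchaImgFinder(HTMLParser):
    """Record the src of the first <img> whose src contains 'captcha'."""

    def __init__(self):
        super().__init__()
        self.src = None

    def handle_starttag(self, tag, attrs):
        if tag == "img" and self.src is None:
            src = dict(attrs).get("src") or ""
            if "captcha" in src.lower():
                self.src = src


def find_src(source_code: str, base_url: str):
    """Return the absolute URL of the captcha image in source_code, or None."""
    finder = _CaptchaImgFinder()
    finder.feed(source_code)
    return urljoin(base_url, finder.src) if finder.src else None


def download_image(url: str, image_name: str) -> str:
    """Save the image at url to the file image_name and return the file name."""
    with urlopen(url, timeout=10) as resp, open(image_name, "wb") as f:
        f.write(resp.read())
    return image_name
```

If the portal ties each captcha to the browser session, fetching the image with a separate HTTP client may return a different captcha than the one the browser shows; in that case, saving the captcha element directly with Selenium's WebElement.screenshot(filename) is an alternative.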

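The per-roll-number loop and its three outcomes can be modelled independently of the browser. In this sketch, submit stands in for the Selenium form filling and is hypothetical, and the bounded retry via max_attempts is an assumption; the script itself uses a while loop whose exact retry and stopping rules are not described here.

```python
from enum import Enum, auto


class Outcome(Enum):
    """The three scenarios the script distinguishes after submitting the form."""

    CORRECT_CAPTCHA = auto()
    NOT_FOUND = auto()
    WRONG_CAPTCHA = auto()


def scrape_range(enrollment_numbers, submit, max_attempts=5):
    """Submit each enrollment number, retrying only when the captcha was misread.

    `submit` is a hypothetical callable that fills in and submits the form for one
    enrollment number and returns a tuple (Outcome, result_data_or_None).
    """
    results, not_found, failed = [], [], []
    for en_num in enrollment_numbers:
        for _ in range(max_attempts):
            outcome, data = submit(en_num)
            if outcome is Outcome.CORRECT_CAPTCHA:
                results.append(data)
                break
            if outcome is Outcome.NOT_FOUND:
                not_found.append(en_num)
                break
            # Outcome.WRONG_CAPTCHA: try again with a fresh captcha.
        else:
            failed.append(en_num)  # every attempt hit a wrong captcha
    return results, not_found, failed
```

Keeping the browser work behind submit makes the retry logic testable with a fake function, and capping the attempts prevents an endless loop if OCR keeps misreading a particular captcha.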
Note

- Ensure you have Microsoft Edge WebDriver (or the appropriate WebDriver for your browser) installed, and set the edge_driver_path variable in the script to its location.

- Make sure you have the correct branch and semester selected before running the script.

- The script may require adjustments if the structure of the RGPV result page changes, so keep it in step with the result portal to ensure it continues to work.

- The project can help academic institutions or individuals automate the retrieval of student result data from the RGPV website.

Happy scraping!

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

- This script was developed as part of a college project to automate result retrieval.

- Thanks to the open-source community for providing tools and libraries used in this project.

Feel free to contribute to the project or report issues on the GitHub repository.